AI-powered visual search arrives on the iPhone


# Apple Introduces Visual Search for iPhone, Enhancing AI Capabilities

## Introduction

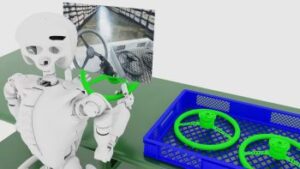

Apple's latest update brings visual search a step closer to everyday use. At its core is **Apple Intelligence**, Apple's suite of AI features. With the iPhone 16 and 16 Plus, Apple unveiled the **Camera Control** button, which serves as the gateway to **Visual Intelligence**, a feature designed to change how users look up information about what they see.

## The Birth of Visual Search

Apple announced **Visual Search**, delivered through Visual Intelligence, at its September 2024 iPhone event, marking a significant step for its AI-driven ecosystem. The feature combines **reverse image search** with text recognition, working much like Google Lens but built directly into the iPhone.

### How It Works

Pointing the camera at a restaurant, for example, can pull up its hours, ratings, and menu options. Similarly, capturing an event flyer can create a calendar entry with the event's time, date, and location.

**Visual Intelligence** can also hand images off to Google Search, and Apple says it does so while protecting user privacy. The feature also integrates with **ChatGPT** through Apple's partnership with OpenAI, letting users ask about anything from homework assignments to event planning with a press of the iPhone's Camera Control button.

### Integration with Apple Services

Visual Search is also meant to work with other Apple apps, such as Photos and the iWork suite, so that information it captures can flow between them. Apple says **Visual Intelligence** will respect user privacy, using location or device information only when users grant it through app permissions.

## Launch Details and Beta Testing

Early access is limited to a select group of users in the U.S., with a wider release planned for later in 2024. Early testers may run into issues with image-processing speed and integration across apps, which Apple aims to address based on their feedback.

### Behind the Story

Kyle Wiggers, a technology journalist, covered the feature's unveiling. His reporting spans AI, hardware, and user experience, with an eye to how features like Visual Search fit into Apple's broader ecosystem.

## Conclusion

Apple's Visual Search points toward a future in which processing visual data is as intuitive as using voice or gesture commands. With ChatGPT integration and connections to Apple's own apps, it aims to make everyday interactions with the iPhone faster and more productive.

### About Kyle Wiggers

Kyle Wiggers is a tech journalist who covers AI trends and consumer electronics. His work often explores how hardware and software together shape user experiences, looking beyond the immediate product release.